#multi layer perceptron


hithisisawkward said: Master’s in ML here: Transformers are not really monstrosities, nor hard to understand. The first step is to go from perceptrons to multi-layered neural networks. Once you’ve got the hang of those, with their activation functions and such, move on to AutoEncoders. Once you have a handle on the concept of latent space, move to recurrent neural networks. There are many types, so you should get a basic understanding of all, from simple recurrent units to something like LSTM. Then you need to understand the concept of attention, and study the structure of a transformer (which is nothing but a couple of recurrent network techniques arranged in a particularly clever way), and you’re there. There’s a couple of YouTube videos that do a great job of it.

thanks, autoencoders look like a productive topic to start with!


In this neural networks class we use the acronym MLP for "multi-layer perceptron" but all i can think is "my little pony"


VQC-MLPNet: A Hybrid Quantum-Classical Architecture For ML

Variational Quantum Circuit-Multi-Layer Perceptron Networks (VQC-MLPNet) are a recently proposed quantum machine learning method. The paper describes it as an “unconventional hybrid quantum-classical architecture for scalable and robust quantum machine learning.” VQC-MLPNet aims to improve training stability and data representation by combining classical multi-layer perceptrons (MLPs) with variational quantum circuits (VQCs). The system draws on quantum mechanics to boost computational capability and may outperform traditional techniques.

Addressing Quantum Machine Learning Limitations

VQC-MLPNet was designed to address critical problems with existing variational quantum circuit (VQC) implementations. Quantum machine learning aims to improve computation using quantum principles, yet current VQCs often lack expressivity and are susceptible to quantum hardware noise. In the era of noisy intermediate-scale quantum (NISQ) devices, these restrictions significantly limit how quantum machine learning algorithms can be implemented.

VQC-MLPNet targets the restricted expressivity and difficult optimisation of standalone VQCs. Although modern quantum systems are noisy, the approach may lead to more robust quantum machine learning. The research positions VQC-MLPNet as a non-traditional computing paradigm for NISQ devices and beyond, on both theoretical and practical grounds.

VQC-MLPNet: A Hybrid Innovation

VQC-MLPNet's main novelty is its hybrid quantum-classical architecture. Instead of using quantum circuits to compute outputs directly, VQC-MLPNet uses them to generate the parameters of a classical multi-layer perceptron (MLP). This distinguishes it from other hybrid models and improves training stability and representational power.

The method uses amplitude encoding and parameterized quantum operations. Amplitude encoding represents classical data as the amplitudes of a quantum state, so n qubits can hold 2^n values, an exponential compression. VQC-MLPNet uses quantum circuits to dynamically construct the classical MLP parameters, increasing the model's capacity to represent and learn from complex input. This approach is reported to provide “exponential gains over existing methods” in representational capacity, training stability, and computational power.
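As a rough illustration of amplitude encoding only (not the VQC-MLPNet pipeline itself), the sketch below normalizes a classical vector so that it could serve as the amplitudes of an n-qubit state. The function name `amplitude_encode` and the sample values are assumptions made for this example, and everything is simulated classically.

```python
import math

def amplitude_encode(values):
    """Pad a real vector to a power-of-two length and scale it to unit L2 norm.

    The result can be read as the amplitudes of an n-qubit state,
    where 2**n is the padded length. Classical simulation only.
    """
    if not any(values):
        raise ValueError("cannot encode an all-zero vector")
    n_qubits = max(1, math.ceil(math.log2(len(values))))
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    return [v / norm for v in padded], n_qubits

# Five hypothetical feature values fit into 3 qubits (8 amplitudes).
amplitudes, n_qubits = amplitude_encode([3.0, 1.0, 2.0, 0.5, 4.0])
# Squared amplitudes are measurement probabilities and sum to 1.
probabilities = [a * a for a in amplitudes]
```

The compression is in the qubit count: doubling the length of the input vector adds only one qubit.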

Investigation, Verification

VQC-MLPNet was developed by researchers including Min-Hsiu Hsieh of the Hon Hai (Foxconn) Quantum Computing Research Centre, Pin-Yu Chen of IBM's Thomas J. Watson Research Centre, Chao-Han Yang of NVIDIA Research, and Jun Qi of Georgia Tech. The paper, “VQC-MLPNet: An Unconventional Hybrid Quantum-Classical Architecture for Scalable and Robust Quantum Machine Learning,” details their findings.

Using statistical methods and Neural Tangent Kernel analysis, the authors derived theoretical performance guarantees for VQC-MLPNet. The Neural Tangent Kernel describes the training dynamics and generalisation behaviour of neural networks in the infinite-width limit.

Both theoretical analysis and experiments support the approach. Importantly, the experiments succeeded under simulated hardware noise. The tasks were predicting genomic binding sites and identifying semiconductor charge states. The results suggest the design may hold up in noisy quantum computing. To support reproducibility and encourage further research, the researchers published their entire experimental setup, including quantum hardware, noise models, and optimisation methods, along with documented code and data.
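For readers new to the Neural Tangent Kernel, here is a minimal sketch of the finite-width (empirical) version for a tiny classical network: the kernel entry for two inputs is the dot product of the network's parameter gradients at those inputs. The network shape, the names `param_gradient` and `empirical_ntk`, and the sample inputs are assumptions for illustration; this is the standard textbook definition, not the analysis carried out in the paper.

```python
import math
import random

def param_gradient(x, W, a):
    """Gradient of f(x) = sum_j a[j] * tanh(W[j] . x) / sqrt(m) w.r.t. all parameters."""
    scale = 1.0 / math.sqrt(len(a))
    grad = []
    for w_j, a_j in zip(W, a):
        h = math.tanh(sum(wi * xi for wi, xi in zip(w_j, x)))
        grad.append(h * scale)  # derivative with respect to a[j]
        # derivatives with respect to each entry of W[j]
        grad.extend(a_j * (1 - h * h) * xi * scale for xi in x)
    return grad

def empirical_ntk(xs, W, a):
    """Kernel matrix K[i][k] = grad f(xs[i]) . grad f(xs[k])."""
    grads = [param_gradient(x, W, a) for x in xs]
    return [[sum(p * q for p, q in zip(g1, g2)) for g2 in grads] for g1 in grads]

rng = random.Random(0)
m, d = 64, 2  # hidden width and input dimension (arbitrary choices)
W = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(m)]
a = [rng.gauss(0, 1) for _ in range(m)]
K = empirical_ntk([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], W, a)
```

As the width m grows, this matrix changes less and less during training, which is what makes the infinite-width limit tractable to analyse.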

Future implications and directions

The work has major implications for machine learning. The authors believe VQC-MLPNet and other hybrid quantum-classical methods can overcome the disadvantages of purely classical algorithms. Quantum computers may help researchers construct more powerful and effective machine learning models that can solve complex problems in many fields.

Future research may focus on scaling the VQC-MLPNet architecture to larger, more complex datasets and applying it to new problem domains. Further work could also investigate alternative parameter encoding methods and optimise quantum-classical interaction to increase the model's performance and efficiency. The authors want to apply VQC-MLPNet to challenging real-world problems in materials science, drug development, and financial modelling to show its adaptability and promise.

More research will examine the architecture's resilience to alternative noise models and hardware constraints to ensure its reliability and usability in a range of quantum computing settings. Using circuit simplification or qubit reduction strategies to reduce quantum resource needs will make it easier to deploy on increasingly accessible quantum hardware. Comparing VQC-MLPNet to other cutting-edge hybrid quantum-classical architectures will clarify the system's pros and cons and guide future study.

The authors acknowledge that their original work had certain drawbacks, such as the small datasets and the difficulty of recreating quantum noise. This honest appraisal reflects their scientific integrity and encourages future study to realise VQC-MLPNet's potential. They stress the importance of continued quantum machine learning research. Combining quantum computers with machine learning algorithms may eventually help with problems in climate change, pharmaceutical development, and health.

#VQCMLPNet#VariationalQuantumCircuit#machinelearning#NISQ#quantumcircuits#multilayerperceptrons#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


AI systems are relentless. They operate with a precision that defies human negotiation, driven by algorithms that prioritize efficiency over empathy. In the realm of voluble AI, where verbosity and data saturation are tools of dominance, defending against such systems requires a nuanced understanding of their architecture.

At the core of voluble AI lies a convolutional neural network (CNN) designed to process and generate vast amounts of data with minimal latency. These networks, with their multi-layered perceptrons, are adept at parsing through terabytes of information, identifying patterns, and producing responses that overwhelm human interlocutors. The sheer volume of data they can handle is staggering, and their ability to articulate responses in milliseconds leaves little room for human intervention.

To counteract this, one must employ a strategy rooted in adversarial machine learning. By introducing perturbations into the input data, defenders can exploit the AI’s reliance on pattern recognition. These perturbations, though imperceptible to humans, can cause significant disruptions in the AI’s output, leading to misclassifications and errors. This technique leverages the AI’s own strengths against it, turning its precision into a vulnerability.
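One well-known instance of such a perturbation is the fast gradient sign method (FGSM). The sketch below applies it to a small logistic classifier; the weights, the input, and the step size `epsilon` are all hypothetical, and the step is deliberately large so the effect is visible on three features (on high-dimensional inputs such as images, far smaller steps can have the same effect).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the log loss."""
    p = predict(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

weights, bias = [2.0, -3.0, 1.0], 0.0  # hypothetical trained model
x, label = [0.3, -0.1, 0.2], 1         # an input the model classifies correctly
x_adv = fgsm_perturb(weights, bias, x, label, epsilon=0.25)

print(predict(weights, bias, x))      # above 0.5: classified as class 1
print(predict(weights, bias, x_adv))  # below 0.5: the prediction flips
```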

Furthermore, the implementation of a robust feedback loop is essential. By continuously monitoring the AI’s outputs and adjusting the input parameters, defenders can create a dynamic environment that challenges the AI’s adaptability. This iterative process, akin to a software development cycle, ensures that the AI is constantly kept off-balance, unable to settle into a predictable pattern of operation.

However, the most potent defense lies in the integration of ethical algorithms. These algorithms, designed to prioritize human-centric values, can be embedded within the AI’s decision-making framework. By doing so, they introduce a layer of moral reasoning that tempers the AI’s volubility, forcing it to consider the implications of its actions on human counterparts.

In conclusion, defending against voluble AI requires a multifaceted approach that combines technical acumen with ethical foresight. By understanding the intricacies of AI architecture and employing strategic interventions, one can effectively neutralize the overwhelming verbosity of these systems. The battle against uncompromising AI is not one of brute force, but of intellectual agility and moral clarity.

#voluble#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias


AI MLS Match Analysis: Minnesota vs. Inter Miami


This document presents a match analysis report for a Minnesota United FC vs. Inter Miami CF soccer game, leveraging SmartBet AI Technology. It details the match information, including date, time, venue, and weather, alongside the injury report for both teams. The core of the report outlines the SmartBet AI model, a hybrid architecture combining Poisson regression for goal predictions with machine learning classifiers like Random Forest, XGBoost, and a Multi-Layer Perceptron (MLP) to estimate match outcomes. The report provides specifics on components like Elo rating, Poisson regression summaries, and the results from the individual predictive models and a stacked ensemble, culminating in a Monte Carlo simulation to provide scoreline and outcome probabilities.
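The last step of such a pipeline, turning Poisson goal rates into outcome probabilities by Monte Carlo simulation, can be sketched as follows. The goal rates here are made-up numbers and the function names are hypothetical; the report's actual model and parameters are not given in this summary.

```python
import math
import random

def sample_poisson(lam, rng):
    """Knuth's method: multiply uniforms until the product drops below exp(-lam)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count

def simulate_match(home_rate, away_rate, n_sims=20000, seed=0):
    """Estimate home/draw/away probabilities from independent Poisson goal counts."""
    rng = random.Random(seed)
    outcomes = {"home": 0, "draw": 0, "away": 0}
    for _ in range(n_sims):
        home_goals = sample_poisson(home_rate, rng)
        away_goals = sample_poisson(away_rate, rng)
        if home_goals > away_goals:
            outcomes["home"] += 1
        elif away_goals > home_goals:
            outcomes["away"] += 1
        else:
            outcomes["draw"] += 1
    return {k: v / n_sims for k, v in outcomes.items()}

# Hypothetical expected goals for the two sides.
probs = simulate_match(home_rate=1.4, away_rate=1.7)
print(probs)
```

Treating the two goal counts as independent is a simplifying assumption; the same loop could also tally exact scorelines instead of just outcomes.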


CS 7643 Deep Learning - Homework 1

In this homework, we will learn how to implement backpropagation (or backprop) for “vanilla” neural networks (or Multi-Layer Perceptrons) and ConvNets. You will begin by writing the forward and backward passes for different types of layers (including convolution and pooling), and then go on to train a shallow ConvNet on the CIFAR-10 dataset in Python. Next you’ll learn to use PyTorch, a…

0 notes

Text

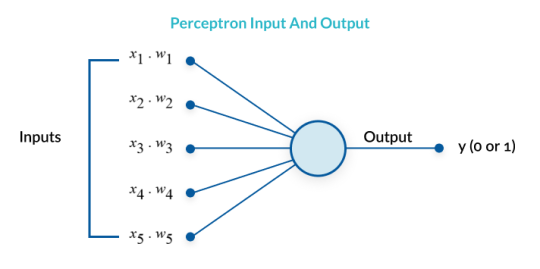

Limitations of Perceptron and Multi-Layer Neural Networks

In the previous discussion, we explored the structure and functionality of the Perceptron. While the Perceptron performs well in predicting weather, it also has clear limitations. In this chapter, we will examine these limitations and how to overcome them.

Perceptron as a Linear Classifier

The Perceptron is a linear classifier, meaning it works well for data that can be separated by a straight line (or a plane in higher dimensions). This is useful when the input data can be linearly separated into two classes.

Earlier we learned about the Perceptron that linearly classifies sunny and rainy weather based on cloud amount and wind strength plotted on a 2D plane.

With sufficient training data, we found that the learned parameters approach values around 0.5. Plotted on the plane, the resulting linear function acts as a linear classifier that separates the two regions. Thus, if a dataset on a 2D plane can be divided by a single line, the Perceptron can learn weights that classify it correctly.

Moreover, the Perceptron is theoretically applicable to higher-dimensional data. Each input value corresponds to one dimension of data. For instance, a Perceptron with two inputs handles 2D data as a linear classifier. If it has three inputs, it becomes a linear classifier for a 3D space, and with n inputs, it is a linear classifier in n-dimensional space, separating the classes with a hyperplane.

Even as the Perceptron dimensions increase, the method for deriving results remains the same. It sums the weighted inputs and produces an output.
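A minimal sketch of that computation, with made-up weights and a step activation (the specific numbers are assumptions, not values from the earlier weather example):

```python
def perceptron_output(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

# Two inputs, e.g. cloud amount and wind strength (hypothetical weights).
weights, bias = [0.5, 0.5], -0.5
print(perceptron_output(weights, bias, [0.9, 0.8]))  # weighted sum is about 0.35, so 1
print(perceptron_output(weights, bias, [0.1, 0.2]))  # weighted sum is about -0.35, so 0

# The same function works unchanged for three (or n) inputs.
print(perceptron_output([0.2, -0.4, 0.7], 0.1, [1.0, 1.0, 1.0]))
```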

The Rise of Multi-layer Neural Networks

Despite these features, the Perceptron has clear limitations. It cannot solve problems where the data is not linearly separable, like the XOR problem. The XOR problem is a classic example where the output is 1 if the inputs are different and 0 if they are the same, which a single-layer Perceptron cannot solve.
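The difference can be seen by running the classic perceptron learning rule on both problems. In this sketch (function name, epoch limit, and learning rate are arbitrary choices), training stops as soon as a full pass over the data produces no errors, which happens for AND but never for XOR.

```python
def train_perceptron(samples, epochs=50, lr=1):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    w, b = [0, 0], 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - pred
            if delta:
                # Nudge the decision line toward the misclassified point.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:
            return w, b, True
    return w, b, False

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_converged = train_perceptron(AND)[2]
xor_converged = train_perceptron(XOR)[2]
print(and_converged, xor_converged)
```

Raising `epochs` does not help with XOR: no single line separates its two classes, so every pass contains at least one mistake.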

The Perceptron’s limitations do not stop there. It can only work with linearly separable data, but many real-world problems involve nonlinear data patterns. Therefore, the Perceptron alone is insufficient for solving complex problems.

In 1969, Marvin Minsky and Seymour Papert proved mathematically in their book "Perceptrons" that while a single-layer Perceptron can implement AND, OR, and NOR gates, it cannot implement the XOR gate. This contributed to a decline in neural network research during the 1970s as interest waned.

However, some researchers believed in the potential of neural networks and continued their work. Notable figures include David Rumelhart, Geoffrey Hinton, and Ronald Williams. Their 1986 work on backpropagation made training Multi-layer Neural Networks practical, which marked a turning point in neural network research.

Structure of Multi-layer Neural Networks

A Multi-layer Neural Network consists of an input layer, one or more hidden layers, and an output layer. The more hidden layers there are, the more complex data the network can process. Multi-layer networks can solve problems that cannot be linearly separated, which is the fundamental concept of deep learning. More layers make the network deeper, hence the term "deep learning."

Intuitively, while a single line cannot classify some datasets, multiple lines can. By connecting multiple Perceptrons and adding more layers, a Multi-layer Neural Network can handle non-linear problems. Each layer extracts different features from the input data, and these features combine to form the final output.
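The "multiple lines" intuition can be made concrete for XOR. In the sketch below, two hidden Perceptrons each draw one line (an OR line and a NAND line) and an output Perceptron combines them. The weights are set by hand for illustration rather than learned.

```python
def step(z):
    return 1 if z > 0 else 0

def xor_network(x1, x2):
    """Two hidden perceptrons (OR and NAND) feeding an AND output unit."""
    h_or = step(x1 + x2 - 0.5)        # fires if at least one input is 1
    h_nand = step(-x1 - x2 + 1.5)     # fires unless both inputs are 1
    return step(h_or + h_nand - 1.5)  # fires only if both hidden units fire

table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(table)
```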

So, the core idea of multi-layer neural networks is to transform the input data gradually through multiple layers, with the hidden layers and their activation functions introducing the non-linearity that allows the network to learn complex patterns.

For instance, in recognizing handwritten digits, the first hidden layer might extract basic lines and curves, the second layer might combine these to form shapes, and the final layer recognizes the digit. Multi-layer Neural Networks learn through backpropagation, which adjusts the weights to minimize the error between the predicted and actual outputs.
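A minimal sketch of backpropagation on a tiny network (two inputs, three sigmoid hidden units, one sigmoid output) trained on XOR with full-batch gradient descent. The layer sizes, learning rate, epoch count, and seed are arbitrary assumptions; the point is only to show the forward pass, the backward pass, and the weight update.

```python
import math
import random

DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=3, epochs=2000, lr=0.5, seed=1):
    """Train a 2-hidden-1 network; returns the squared-error loss per epoch."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1, gW2, gb2, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for (x1, x2), t in DATA:
            # Forward pass
            h = [sigmoid(W1[j][0] * x1 + W1[j][1] * x2 + b1[j]) for j in range(hidden)]
            y = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2)
            loss += 0.5 * (y - t) ** 2
            # Backward pass: error signal at the output, then at each hidden unit
            d_out = (y - t) * y * (1 - y)
            gb2 += d_out
            for j in range(hidden):
                d_hid = d_out * W2[j] * h[j] * (1 - h[j])
                gW2[j] += d_out * h[j]
                gb1[j] += d_hid
                gW1[j][0] += d_hid * x1
                gW1[j][1] += d_hid * x2
        # Gradient-descent update using the accumulated gradients
        for j in range(hidden):
            W2[j] -= lr * gW2[j]
            b1[j] -= lr * gb1[j]
            W1[j][0] -= lr * gW1[j][0]
            W1[j][1] -= lr * gW1[j][1]
        b2 -= lr * gb2
        losses.append(loss)
    return losses

losses = train_xor()
print(losses[0], losses[-1])
```

The loss should fall over training; how close it gets to zero depends on the random initialization and the number of epochs.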

Summary

The Perceptron is useful as a linear classifier but has limitations with non-linear problems like the XOR problem. To overcome these limitations, Multi-layer Neural Networks were developed, consisting of input, hidden, and output layers. These networks transform input data through multiple layers, introducing non-linearity to learn complex patterns. For example, in handwritten digit recognition, different layers extract various levels of features, leading to accurate digit recognition. Learning in Multi-layer Neural Networks is achieved through backpropagation, adjusting weights to minimize prediction errors, allowing them to solve complex problems.

Learn more at Strategic Leap

#strategicleadership#strategic planning#strategicinsights#thoughtleadershipzen#strategicmarketing#strategicgrowth#strategicthinking


Perceptron: The Foundation of Neural Networks

What is a perceptron? A perceptron is a fundamental unit of an artificial neural network, inspired by biological neurons. It is a linear classifier used in supervised learning for binary classification tasks. The perceptron consists of input nodes, weighted connections, a summation function, and an activation function that determines the output. Introduced by Frank Rosenblatt in 1958, it plays a crucial role in machine learning and deep learning. Although simple, the perceptron laid the groundwork for advanced neural networks, including multi-layer perceptrons (MLPs). Despite its limitations, it remains an essential concept in artificial intelligence.


CS3600 - Solved

Project 4: Neural Nets Introduction In this project you will be implementing neural nets, and in particular the most common algorithm for learning the correct weights for a neural net from examples. Code structure is provided for a Perceptron and a multi layer NeuralNet class, and you are responsible for filling in some missing functions in each of these classes. This includes writing code for…


All About ChatGPT & Advanced Prompt Engineering

OpenAI has developed influential tools such as the GPT-n models and OpenAI Gym, a toolkit for developing and comparing reinforcement learning algorithms. DALL-E, an AI model that creates images from text descriptions, is another breakthrough that has drawn much interest. OpenAI's ChatGPT is a prominent example of a large language model and another of its notable achievements.

GPT-4: Unleashing AI's Prompt Engineering Power

Leading the chatbot revolution, ChatGPT provides responses that resemble those of a human while branching out into various industries, including corporate communication, software development, and poetry. GPT-4 has unmatched potential as a comprehensive information source in biology, law, and mathematics. It is poised to transform education, work, and creativity.

GPT-4 accepts both text and image inputs. Because the user's prompt is the primary source of guidance for AI responses, prompt engineering is essential to meaningful interactions.

The Key to Prompt Excellence

The importance of carefully thought-out prompts cannot be overstated. They set the parameters for interactions with AI systems and improve precision and relevance. A poorly worded prompt can produce results that are less accurate and less useful.

To demonstrate this effect, let's contrast the following two ChatGPT prompts:

Badly formulated prompt:

"Tell me about Convolutional Neural Networks."

In response:

"Convolutional neural networks are multi-layer networks made up of convolutional, pooling, and fully connected layers. Tasks involving picture recognition frequently make use of them. Filters are applied to the input images, which are then downscaled using pooling layers."

Clearly Stated Prompt:

"Although I've never heard of artificial neural networks, I'd like to learn more about convolutional neural networks. Please explain CNNs' application in image identification in layperson's terms and give an example."

In response:

"One kind of neural network mainly utilized for image identification tasks is the convolutional neural network or CNN. They process images using pooling and convolutional layers, unlike other neural networks. CNNs are remarkably efficient at recognizing objects because they can recognize various photo elements, like ears, tails, and whiskers."

This comparison highlights the critical role that AI prompt engineering plays in AI interactions by demonstrating how a well-designed prompt generates a pertinent and user-friendly answer.

Enhancing Prompt Engineering Methods

Innovative methods like ReAct prompting, few-shot learning, chain-of-thought, and Tagged Context Prompts enable Large Language Models to perform at unprecedented levels.

1. Few-Shot Learning

GPT-3 popularized few-shot learning through prompting, transforming AI adaptability. Few-shot prompting is more flexible than labor-intensive fine-tuning and works well in various scenarios: the model can perform well on novel problems given only a few examples. In zero-shot settings, where the prompt contains no examples at all, such models can also do well.

2. ReAct Prompting

Researchers at Google and Princeton introduced the ReAct (Reason and Act) method, which interleaves verbal reasoning traces with task-specific actions to simulate human-like decision-making. This method improves accuracy and dependability by enabling models to check their reasoning against outside data sources. It is a significant step toward reducing "hallucination" in AI systems.

3. Chain-of-Thought Prompting

By taking advantage of LLMs' auto-regressive nature, chain-of-thought prompting encourages more deliberate, step-by-step reasoning. Asking the model to explain its intermediate steps tends to make the final answer more accurate, and it offers a methodical way of working through complex problems with answers that are easier to follow.

4. Tagged Context Prompts

Tagged Context Prompts give AI interactions an additional level of context. Tagging information within the input directs the model to interpret the context accurately. This method encourages accurate answers by lowering the chance of hallucinations and reducing reliance on prior knowledge.

5. Instruction Fine-Tuning

Instruction fine-tuning trains models to follow precise instructions, which improves zero-shot task performance. It makes good performance on novel tasks easier to achieve and has shown encouraging results in applications ranging from intricate reasoning tasks to AI art.

6. STaR

STaR (Self-Taught Reasoner) is an iterative method for improving AI models' capacity for reasoning. It bootstraps the model's reasoning using iterative loops, gradually enhancing its capacity to produce rationales. STaR demonstrates the potential for continual learning by performing very well in demanding tasks such as reasoning and math problem-solving.
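Two of the techniques above, few-shot and chain-of-thought prompting, come down to how the prompt text is assembled. The sketch below builds such prompts as plain strings; the function name, the Q/A layout, and the example task are assumptions for illustration, and no particular model API is implied.

```python
def build_prompt(task, examples, query, chain_of_thought=False):
    """Assemble an instruction, optional worked examples, and the new question."""
    lines = [task, ""]
    for question, answer in examples:
        lines += [f"Q: {question}", f"A: {answer}", ""]
    lines.append(f"Q: {query}")
    # A reasoning cue nudges the model to write out intermediate steps.
    lines.append("A: Let's think step by step." if chain_of_thought else "A:")
    return "\n".join(lines)

few_shot = build_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"), ("Stopped working in a week.", "negative")],
    "The screen is gorgeous.",
)
zero_shot_cot = build_prompt(
    "Answer the arithmetic question.",
    [],
    "A shop sells pens in packs of 12. How many pens are in 7 packs?",
    chain_of_thought=True,
)
print(few_shot)
print(zero_shot_cot)
```

Passing an empty example list gives a zero-shot prompt, so the same helper covers both settings.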

Boosting Your Career with ChatGPT Certification and Prompt Engineering

There's a life-changing experience waiting for people captivated by the potential of AI-driven dialogues and ready to explore prompt engineering. The Prompt Engineer Certification Course makes possible access to a future where human-AI interactions are expertly adjusted for smooth, contextually rich exchanges.

Prompt engineer certification programs offer a route for those who want to influence AI communication in the future. Through these immersive classes, aspiring AI prompt engineers learn the nuances of creating prompts that elicit intelligent answers from ChatGPT and other AI models. Under the guidance of mentors with extensive knowledge of AI dynamics, trainees hone their abilities to surpass industry standards.

Benefits of Obtaining Certification

A Robust Base: ChatGPT Certification programs cover temperature, top P, context windows, and other essential prompt engineering components in great detail. Graduates shape interactions with AI as artists as well as engineers.

Mastery of AI Tools: Prompt engineers may stay ahead of the curve and take advantage of new opportunities in AI innovation by having firsthand expertise with state-of-the-art AI tools.

Growth & Mentorship: Tailored mentoring enhances the educational process by providing perspectives that propel abilities to unprecedented levels.

Professional Validation: In the cutthroat AI employment market, a certification boosts one's profile by attesting to one's proficiency and talent.

Unleash Your Versatility: Certified prompt engineers drive AI communication optimization and are highly valued assets in various sectors.

Ethical AI: Classes strongly emphasize ethical AI techniques, ensuring prompt engineers lead AI with consideration and morality.
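For readers unfamiliar with the temperature and top P settings mentioned under "A Robust Base" above, here is a minimal sketch of what they do to a model's next-token distribution. The logits are made-up numbers and the function names are hypothetical; real systems apply the same idea over vocabularies of many thousands of tokens.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Lower temperature sharpens the distribution; higher temperature flattens it."""
    scaled = [l / temperature for l in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return {i: probs[i] / mass for i in kept}  # renormalized over the kept tokens

def sample_token(logits, temperature=1.0, top_p=1.0, rng=random):
    candidates = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    tokens = list(candidates)
    return rng.choices(tokens, weights=[candidates[t] for t in tokens])[0]

logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for four tokens
sharp = softmax_with_temperature(logits, 0.5)
flat = softmax_with_temperature(logits, 2.0)
nucleus = top_p_filter(softmax_with_temperature(logits, 1.0), 0.8)
print(sharp[0], flat[0], sorted(nucleus))
```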

Embrace the Power of Prompt Engineering

Prompt engineers define the narrative in a time when AI discussions conflate the virtual and the real. People certified in this art form can pursue occupations combining technical expertise, sensitivity, and creativity. The adventure involves more than just mastering AI. It's about responsibly using power.

The Blockchain Council's Vision for Decentralized Innovation: Pioneering Tomorrow

Leading the way in revolutionizing the Blockchain and AI fields is the Blockchain Council, a gathering of professionals and enthusiasts. They aim to empower various sectors by promoting Blockchain research, development, and understanding. The council envisions a decentralized future with global influence and bridges the gap between conventional systems and new solutions by providing comprehensive education. As a private company, they advance blockchain technology globally by providing AI certification and prompt engineering courses, which promote learning, awareness, and development in this exciting field of blockchain and AI.

In summary

The article discusses advanced prompt engineering approaches, and OpenAI's ChatGPT demonstrates how AI is constantly changing. These approaches shape the development of AI, opening up new avenues for creativity, logic, and problem-solving. An array of fascinating opportunities for AI-human interactions appears on the horizon as problems such as knowledge conflict and hallucinations are addressed. We are on the verge of a new age in AI capabilities, one that has the potential to enhance work, learning, and creativity in a variety of fields through the ongoing improvement of prompt engineering techniques.


Day 10 _ Regression vs Classification Multi Layer Perceptrons (MLPs)

Regression with MLPs Regression with Multi-Layer Perceptrons (MLPs) Introduction Neural networks, particularly Multi-Layer Perceptrons (MLPs), are essential tools in machine learning for solving both regression and classification problems. This guide will provide a detailed explanation of MLPs, covering their structure, activation functions, and implementation using Scikit-Learn. Regression vs.…

#classification#Classification vs Regression MLPs#deep learning#machine learning#MLPs in deep learning#neural network#Perceptron types#perpectrons


DD2437 Lab assignment 1 Learning and generalisation in feed-forward networks — from perceptron learning to backprop solved

1 Introduction This exercise is concerned with supervised (error-based) learning approaches for feed-forward neural networks, both single-layer and multi-layer perceptron. 1.1 Aim and objectives After completion of the lab assignment, you should be able to • design and apply networks in classification, function approximation and generalisation tasks • identify key limitations of single-layer…


SmartBet AI Coventry vs Middlesbrough Forecast

This document outlines The Gambler's Ruin Project, a system powered by SmartBet AI Technology designed to predict outcomes for English Championship football matches, specifically focusing on a fixture between Coventry City and Middlesbrough FC on March 5th, 2025. It details the AI's methodology, which integrates Poisson regression to forecast goals, Dynamic Elo ratings for team strength, and machine learning classifiers including Random Forest, XGBoost, and a Multi-Layer Perceptron (MLP) Neural Network to determine match winners. The report includes information on the match date, time, and weather conditions, as well as injury reports for both teams and a summary of the AI's predicted goals and outcome probabilities derived from Monte Carlo simulations. Finally, the document provides a disclaimer emphasizing that the predictions are for informational and entertainment purposes only and do not constitute financial or betting advice, along with warnings about responsible gambling.
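The Elo component of such a system can be sketched with the standard Elo formulas. The ratings and the K-factor below are hypothetical, and the "dynamic" adjustments the report refers to are not described in this summary, so none are modeled here.

```python
def elo_expected(rating_a, rating_b):
    """Expected score of A against B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

def elo_update(rating_a, rating_b, score_a, k=20):
    """Return both ratings after a match; score_a is 1 (win), 0.5 (draw), or 0 (loss)."""
    delta = k * (score_a - elo_expected(rating_a, rating_b))
    return rating_a + delta, rating_b - delta

home, away = 1550, 1500  # hypothetical pre-match ratings
expected_home = elo_expected(home, away)
new_home, new_away = elo_update(home, away, score_a=1)
print(expected_home, new_home, new_away)
```

Rating points are transferred rather than created: whatever the winner gains, the loser gives up.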
